Opera One Local LLMs: A Game-Changer in AI Accessibility

Imagine having the power of AI just a few clicks away, running right on your own device. Opera One’s support for local LLMs is changing the way we interact with artificial intelligence. Opera has opened up a library of over 150 large language model (LLM) variants from around 50 families, which users can download and run locally on their computers. The move enhances privacy by processing data on-device, and it gives users direct access to sophisticated models such as Meta’s Llama and Google’s Gemma.

What Are Local LLMs and Why Do They Matter?

Local LLMs are AI models that reside and run directly on your personal computer, with no need to connect to external servers. Your prompts and data stay on your machine, giving you control over privacy and security. These models are also versatile: from composing emails to generating code, they can handle a wide range of tasks. And because processing happens locally, responses do not depend on your internet connection or on a remote server staying up, although how fast a model runs will depend on your own hardware.

Exploring the Diversity of Opera One’s AI Suite

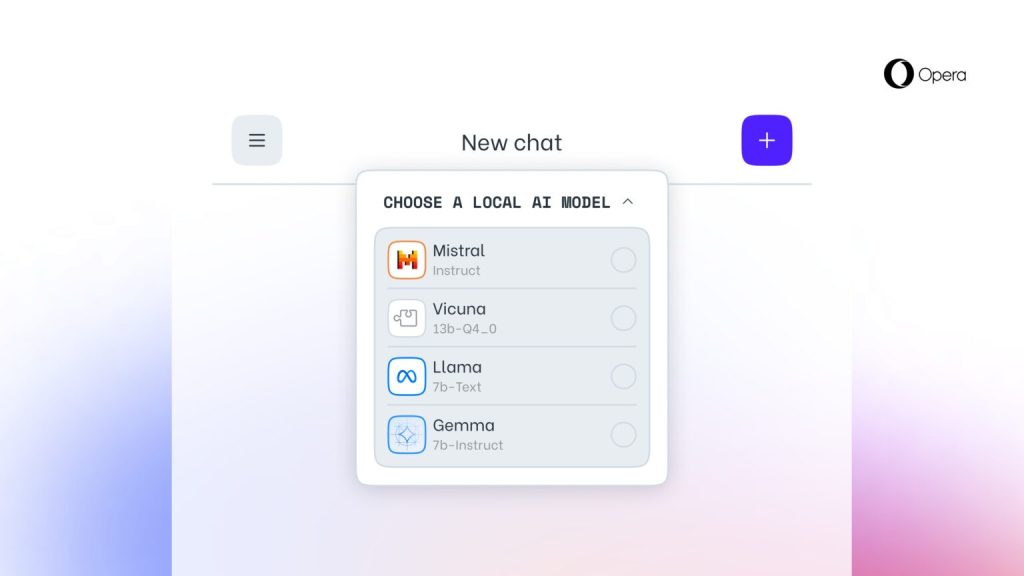

The selection of models available for local download in Opera One is impressive. With heavyweights such as Vicuna alongside Meta’s Llama and Google’s Gemma, users have a range of specialized models at their disposal. Each model brings something different to the table, whether that is stronger reasoning or more nuanced language understanding, so there is something for a variety of needs and preferences.

How to Harness the Power of Opera One Local LLMs

Step-by-Step Guide to Downloading and Running AI Models

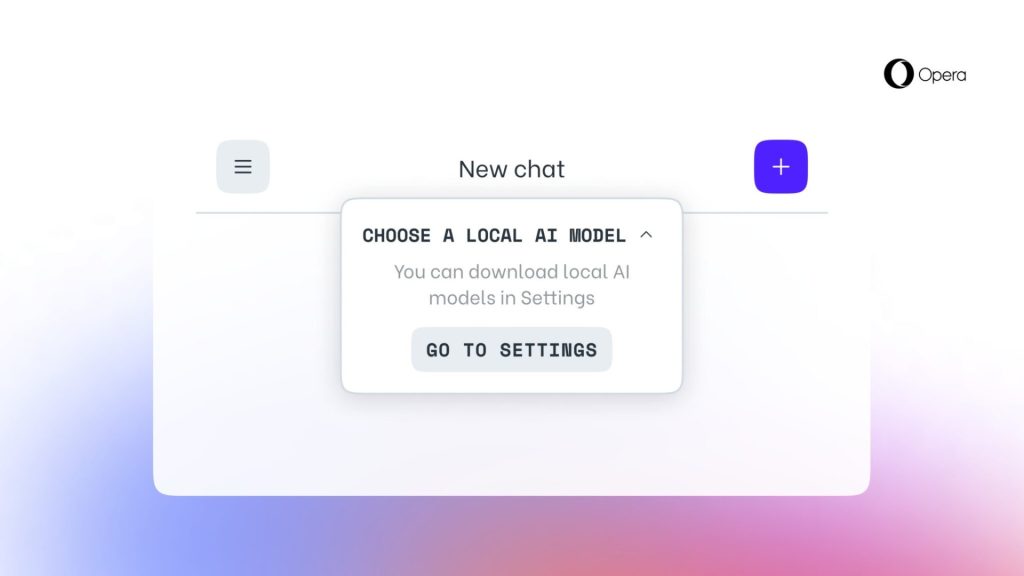

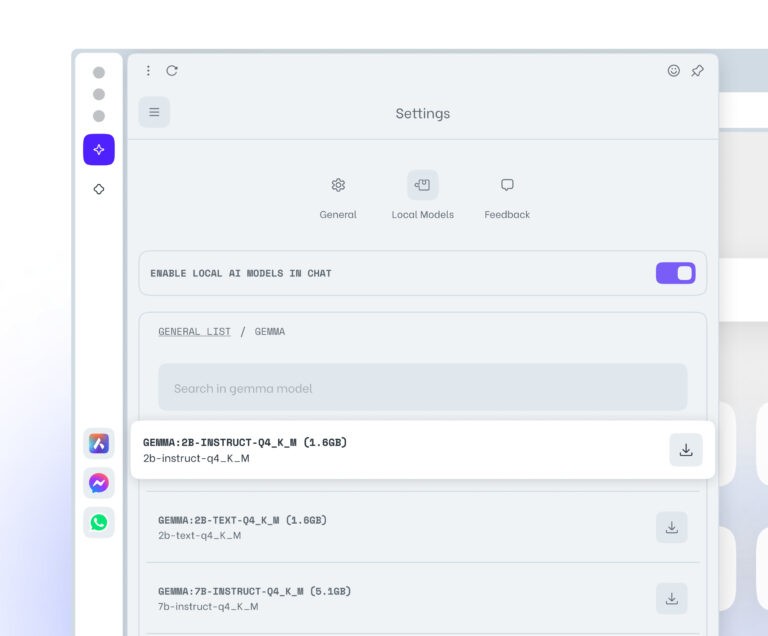

To tap into these AI resources, start by upgrading to the latest version of Opera Developer. Once it is installed, open Aria Chat in the sidebar, where you’ll find a ‘choose local mode’ option. From there, ‘Go to settings’ takes you to the list of models available for download, where you can pick the ones you want. Keep in mind that each variant can take up a significant amount of space on your hard drive, so choose wisely!
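Since storage is the main constraint when choosing a model, a quick free-space check before downloading can help. The following is a minimal Python sketch and not part of Opera: the model names and sizes are hypothetical placeholders, and the `reserve_gib` safety margin is an arbitrary assumption you would adjust for your own machine.

```python
import shutil

GIB = 1024 ** 3  # bytes in one gibibyte


def fits_on_disk(model_size_gib: float, free_bytes: int, reserve_gib: float = 5.0) -> bool:
    """Return True if the model fits while still leaving `reserve_gib` free."""
    needed = (model_size_gib + reserve_gib) * GIB
    return free_bytes >= needed


if __name__ == "__main__":
    # Free space on the drive that holds the current directory.
    free = shutil.disk_usage(".").free

    # Hypothetical model sizes, for illustration only.
    candidates = {"small-model": 2.0, "medium-model": 5.0, "large-model": 10.0}

    for name, size in candidates.items():
        verdict = "fits" if fits_on_disk(size, free) else "too large"
        print(f"{name}: {size} GiB -> {verdict}")
```

The reserve margin is there so that a download does not fill the drive completely; the real size of each variant is whatever Opera shows in its model list.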

Maximizing Efficiency with Ollama Framework

The Ollama framework serves as the backbone for running these models on your local system. Because this open-source framework is integrated into Opera, users can install and run many different LLMs through a single interface, without having to worry about compatibility between models.
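To give a feel for what talking to a locally running model looks like, here is a minimal Python sketch that targets a separately installed, standalone Ollama server rather than Opera’s built-in integration. It assumes the server is listening on its default address (`http://localhost:11434`) and that the model you name has already been pulled; whether Opera exposes the same endpoint is not something this article establishes. The helper names `build_request` and `ask` are illustrative only.

```python
import json
import urllib.request

# Assumption: a standalone Ollama server on its default local port.
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_request(model: str, prompt: str) -> urllib.request.Request:
    """Build a non-streaming generate request for a local Ollama server."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(model: str, prompt: str, timeout: float = 120.0) -> str:
    """Send the prompt to the local server and return the generated text."""
    with urllib.request.urlopen(build_request(model, prompt), timeout=timeout) as resp:
        return json.loads(resp.read())["response"]
```

With a server running, a call such as `ask("gemma", "Say hello in one sentence.")` would return the model’s reply as a string; if no server is reachable, `urlopen` raises an `OSError` subclass. Nothing in the request leaves your machine, which is the point of running models locally.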

The Future Is Here: Personalized AI at Your Fingertips

Customizing Your Experience with Various AI Families

Personalized AI becomes exciting once you realize that you can tailor your digital assistant to your specific needs. Whether it’s Code Llama for developers or Mixtral for natural language processing tasks, there is a model suited to almost every niche requirement.

Why Opera One’s Local Solution Outshines Cloud-Based Services

Compared with cloud-based services, which rely on remote servers, Opera One’s local solution stands out by putting users in control of their own data and by removing the network latency that comes with server-based computation. This approach is designed to give every user a responsive, private, and highly personalized interaction with their chosen AI model.