Overview of MiniMax M2 & Agent

What is MiniMax M2?

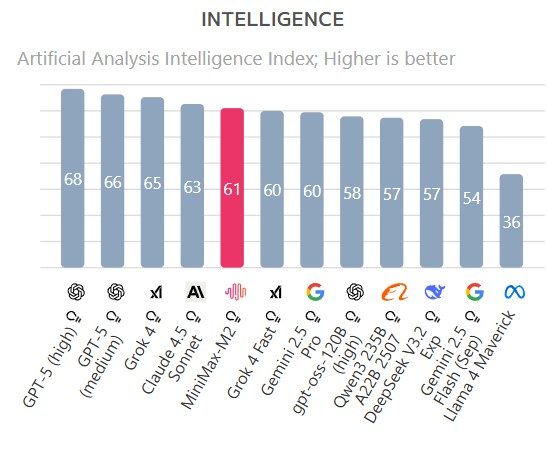

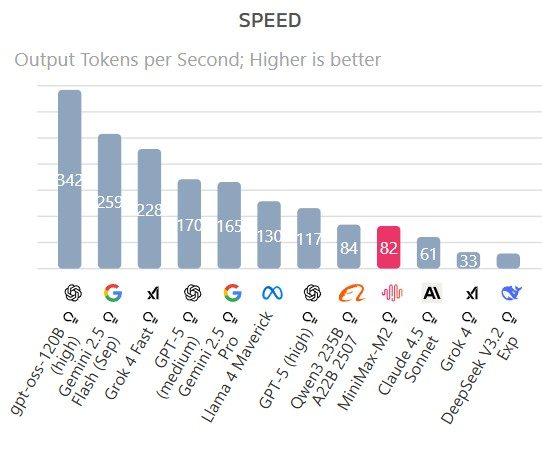

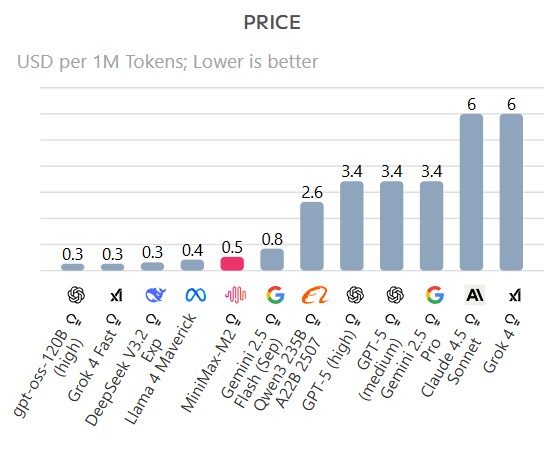

MiniMax M2 is a compact, high-performance AI model designed primarily for coding and agentic workflows. It activates just 10 billion of its 230 billion total parameters, which keeps inference fast and inexpensive while delivering near top-tier results in tool use, programming, and complex reasoning. That makes it well suited to real-time agent applications. Compared with Claude Sonnet, MiniMax M2 is quoted at roughly twice the inference speed (close to 100 tokens per second) and about 8% of the price per million tokens. It covers tasks from multi-file coding to long-chain tool orchestration while keeping solid general intelligence.

Introduction to the Agent system

The Agent system built on MiniMax M2 emphasizes end-to-end workflows, combining programming, tool use, and search capabilities. It is designed to handle complex, long-horizon tasks such as web browsing, code execution, data retrieval, and multi-step problem-solving. The system supports two modes: Lightning Mode, optimized for speed and simple tasks, and Pro Mode, tailored for complex, long-duration tasks like research, software development, and report generation. The open-source nature of MiniMax M2 enables local deployment, fostering accessibility for developers and researchers aiming for efficient, scalable AI agents.

Design and Features of MiniMax M2

Innovative simplicity in design

MiniMax M2 adopts a streamlined architecture focused on balancing performance and deployment ease. Its core is a MoE (Mixture of Experts) model with 230 billion total parameters but only 10 billion activated during inference. This design reduces latency and computational overhead, enabling faster response times and higher throughput, ideal for real-time agent workflows. The simplicity lies in its targeted activation, which delivers sophisticated capabilities without the complexity and resource demands of larger models. This approach makes it easier to deploy and scale, especially in environments where responsiveness and cost are critical.
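To make the idea of targeted activation concrete, here is a minimal sketch of generic top-k expert routing in a Mixture of Experts layer. It is a toy illustration, not MiniMax M2's actual router: the expert functions, gate scores, and the choice of 8 experts with k=2 are all assumptions made for the example. Only the 10B and 230B figures come from the text.

```python
import math

def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def route_top_k(gate_scores, k):
    """Return (expert_index, weight) pairs for the k highest-scoring experts."""
    top = sorted(range(len(gate_scores)),
                 key=lambda i: gate_scores[i], reverse=True)[:k]
    weights = softmax([gate_scores[i] for i in top])
    return list(zip(top, weights))

def moe_forward(x, experts, gate_scores, k):
    """Run only the selected experts and mix their outputs by gate weight."""
    return sum(w * experts[i](x) for i, w in route_top_k(gate_scores, k))

# Toy setup (hypothetical): 8 experts, each a scalar function; 2 run per input.
experts = [lambda x, a=a: a * x for a in range(1, 9)]
gate_scores = [0.1, 2.0, -1.0, 0.5, 1.5, 0.0, -0.5, 0.3]

selected = route_top_k(gate_scores, k=2)
output = moe_forward(3.0, experts, gate_scores, k=2)

# Figures from the text: 10B active parameters out of 230B total.
active_fraction = 10 / 230

print("selected experts:", [i for i, _ in selected])
print(f"active fraction: {active_fraction:.1%}")
```

The six unselected experts are never evaluated, which is where the compute savings come from. With the figures quoted above, roughly 4% of the parameters participate in any single forward pass.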

Key features and specifications

- Parameter Efficiency: 10 billion active parameters out of 230 billion total, optimizing inference speed and cost.

- Performance Benchmarks: Strong results on coding and agentic tasks, with reported scores of about 69.4 on SWE-bench Verified, 46.3 on Terminal-Bench, and 44 on BrowseComp.

- Multilingual and Multitask: Good performance across various languages and benchmarks, including programming, search, and reasoning.

- Open-Source & Deployability: Model weights are available on Hugging Face, with deployment guides for vLLM and SGLang frameworks.

- Pricing: The API costs $0.30 per million input tokens and $1.20 per million output tokens, with throughput of about 100 tokens per second.
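The listed prices can be turned into a quick per-request estimate. The sketch below uses only the two prices quoted in the list; the workload size (200,000 input tokens and 20,000 output tokens for one agent session) is a hypothetical figure chosen for illustration.

```python
INPUT_PRICE_PER_M = 0.30   # USD per million input tokens (from the text)
OUTPUT_PRICE_PER_M = 1.20  # USD per million output tokens (from the text)

def request_cost(input_tokens, output_tokens):
    """Estimated API cost in USD for one request or session."""
    total = (input_tokens * INPUT_PRICE_PER_M
             + output_tokens * OUTPUT_PRICE_PER_M)
    return total / 1_000_000

# Hypothetical agent session: long context in, comparatively short answer out.
cost = request_cost(200_000, 20_000)
print(f"estimated cost: ${cost:.3f}")
```

Agent workloads tend to be input-heavy because the history and tool results are resent on each step, so the input price usually dominates the bill.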

User-friendly interface

The system provides accessible APIs and open-source deployment options, making integration straightforward. The API is documented well enough for quick setup and testing. For local deployment, frameworks like vLLM and SGLang are recommended, and deployment guides are provided for both. Configuration is kept simple: the recommended inference parameters are temperature=1.0, top_p=0.95, and top_k=20. The same ease of use extends to tool calling, which lets the model orchestrate complex tasks with little extra setup.
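As a rough sketch of how the sampling settings above could be passed along, the snippet below builds a JSON body in the OpenAI-style chat completions format that vLLM and SGLang servers can expose. Several things here are assumptions: the model identifier, the helper name `build_chat_request`, and the placement of `top_k` as a top-level field (it is not part of the standard OpenAI schema, though some servers accept it as an extra sampling parameter). The parameter values are the ones stated in the text; check the model card for current recommendations.

```python
import json

def build_chat_request(prompt, model="MiniMaxAI/MiniMax-M2"):
    """Build a chat completions request body with the sampling values above.

    The model name is an assumed identifier; replace it with whatever name
    your server registers.
    """
    return {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "temperature": 1.0,
        "top_p": 0.95,
        "top_k": 20,  # non-standard field; support depends on the server
    }

payload = build_chat_request("Write a function that reverses a string.")
body = json.dumps(payload, indent=2)
print(body)
```

The resulting `body` string can be sent with any HTTP client to the server's chat completions endpoint. No network call is made here, so the snippet runs without a deployed model.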

Performance and Functionality

Efficiency in operation

MiniMax M2 achieves a remarkable balance between speed and intelligence by focusing activation on only 10 billion parameters. This setup results in lower latency, higher throughput, and reduced costs, suitable for high-concurrency applications. Its inference speed of approximately 100 tokens per second outperforms many comparable models, enabling real-time agent responses. For example, in code development workflows, it can iterate through modify-run-fix cycles swiftly, significantly improving productivity in continuous integration setups.
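A back-of-the-envelope estimate shows what the quoted throughput means for a modify-run-fix loop. The sketch assumes the roughly 100 tokens per second figure from the text and ignores prompt processing and network latency. The patch size, test duration, and iteration count are hypothetical.

```python
TOKENS_PER_SECOND = 100  # approximate decoding speed quoted in the text

def generation_seconds(output_tokens, tokens_per_second=TOKENS_PER_SECOND):
    """Time to decode a response of the given length, ignoring prompt time."""
    return output_tokens / tokens_per_second

def cycle_seconds(iterations, tokens_per_patch, run_seconds):
    """Total wall time for repeated modify-run-fix iterations."""
    per_iteration = generation_seconds(tokens_per_patch) + run_seconds
    return iterations * per_iteration

# Hypothetical loop: 5 iterations, 800-token patches, 30-second test runs.
total = cycle_seconds(iterations=5, tokens_per_patch=800, run_seconds=30)
print(f"patch generation: {generation_seconds(800):.0f} s per iteration")
print(f"whole loop: {total:.0f} s")
```

In this example the test run, not the model, accounts for most of each iteration, which is the practical sense in which the model stops being the bottleneck.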

Versatility and adaptability

Designed for multi-faceted tasks, MiniMax M2 handles programming, deep search, and tool integration seamlessly. It excels at multi-file edits, long-horizon planning, and orchestrating complex toolchains like browsing, code execution, and data retrieval. Its performance on benchmarks such as SWE-bench (~69.4) and BrowseComp (~44) demonstrates its ability to adapt across domains. The model is suitable for diverse environments, from lightweight chatbots to complex development agents, thanks to its flexible architecture and open deployment options.
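The toolchain orchestration described above generally follows a loop: the model proposes a tool call, the host executes it, and the result is appended to the history until the model returns a final answer. The sketch below shows that loop with a scripted stand-in for the model, so it runs without any API. Every name in it (`search_docs`, `run_code`, `scripted_model`, `run_agent`, and the action dictionary format) is hypothetical and not taken from MiniMax's tooling.

```python
def search_docs(query):
    """Hypothetical search tool; returns a canned result string."""
    return f"results for {query!r}"

def run_code(source):
    """Hypothetical execution tool; reports the size instead of running code."""
    return f"executed {len(source)} chars"

TOOLS = {"search_docs": search_docs, "run_code": run_code}

def scripted_model(history):
    """Stand-in for the model: picks the next action from the history."""
    tool_turns = sum(1 for m in history if m["role"] == "tool")
    if tool_turns == 0:
        return {"tool": "search_docs", "args": {"query": "reverse a string"}}
    if tool_turns == 1:
        return {"tool": "run_code", "args": {"source": "print('abc'[::-1])"}}
    return {"final": "Done: searched, then ran the snippet."}

def run_agent(task, model, tools, max_steps=5):
    """Alternate between model decisions and tool execution until done."""
    history = [{"role": "user", "content": task}]
    for _ in range(max_steps):
        action = model(history)
        if "final" in action:
            return action["final"], history
        result = tools[action["tool"]](**action["args"])
        history.append(
            {"role": "tool", "name": action["tool"], "content": result}
        )
    return None, history  # step budget exhausted without a final answer

answer, history = run_agent("Reverse a string", scripted_model, TOOLS)
print(answer)
```

The `max_steps` cap is a deliberate design choice: long-horizon agents need a step budget so that a model stuck in a loop of tool calls cannot run indefinitely.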

Comparison with similar models

| Feature | MiniMax M2 | Claude Sonnet 4 & 4.5 | Gemini 2.5 Pro | GPT-5 (thinking) |
|---|---|---|---|---|
| Active Parameters | 10B out of 230B | Not publicly disclosed | Not publicly disclosed | Not publicly disclosed |
| Cost per Million Tokens | $0.30 input / $1.20 output | Significantly higher | Not specified | Not specified |
| Inference Speed | ~100 tokens/sec | Slower | Similar or slower | Likely slower |
| Key Strengths | Speed, cost, multitask, open-source | Performance on certain benchmarks | Coding and agentic tasks | General-purpose, large-scale |
| Deployment | Open weights, vLLM or SGLang | Proprietary | Proprietary | Proprietary |

MiniMax M2 stands out for its targeted efficiency, making it a compelling choice for developers needing fast, cost-effective AI with strong programming and agentic capabilities.

Graph credits: Artificial Analysis.

Applications and Use Cases of MiniMax M2

Home automation

MiniMax M2 can be integrated into smart home systems to enhance automation capabilities. Its fast inference speed and ability to handle complex multi-step tasks make it ideal for managing devices, optimizing energy use, and responding to voice commands efficiently. For example, it can coordinate lighting, climate control, and security systems through natural language interfaces, providing a seamless user experience. Home automation setups benefit from MiniMax M2’s low latency, ensuring real-time responses without lag, and its cost-effectiveness allows for scalable deployment across multiple devices.

Industrial integration

In industrial environments, MiniMax M2 supports automation, monitoring, and decision-making processes. Its agentic performance enables it to analyze sensor data, troubleshoot issues, and execute commands across machinery and systems with minimal delay. For instance, it can oversee factory operations by coordinating between different tools and systems, diagnosing faults, and suggesting corrective actions. Its ability to plan and execute long-chain tool calls helps optimize workflows, reduce downtime, and improve safety. The model's efficiency and speed make it suitable for industrial settings where quick, reliable responses are critical.

Smart building management

MiniMax M2 excels in managing large-scale building systems that require continuous oversight and coordination. It can handle tasks like scheduling maintenance, adjusting environmental controls, and managing security protocols. Its capability to perform in-depth research and long-horizon planning supports proactive management strategies. For example, it can analyze energy consumption patterns, recommend adjustments, and automate responses to occupancy changes. Its low deployment cost and high throughput make it feasible for large campuses or commercial complexes, delivering intelligent control with minimal infrastructure overhead.

Why Choose MiniMax M2 & Agent?

Advantages of simplicity

MiniMax M2’s design emphasizes simplicity without sacrificing performance. Its streamlined architecture enables easier deployment and maintenance, reducing the complexity often associated with larger models. For users, this translates into faster setup times, straightforward integration, and less need for specialized hardware, making it accessible even for smaller teams. Simplified workflows help developers focus on building solutions rather than managing infrastructure, boosting overall productivity.

Cost-effectiveness

MiniMax M2 balances speed, performance, and price. It is priced at about 8% of Claude Sonnet and runs at nearly double the inference speed. Activating only 10 billion parameters keeps latency low and throughput high, which directly reduces operating costs. For example, running multiple agents in parallel on complex tasks becomes financially feasible, opening advanced AI capabilities to a wider range of teams.

Scalability and future-proofing

Built with scalability in mind, MiniMax M2 can be deployed locally, on cloud, or integrated into existing systems with ease. Its open-source model weights facilitate customization and continuous improvement. The model’s architecture supports expanding capabilities, such as adding new tools or integrating with additional systems, making it adaptable to future needs. As AI technology evolves, MiniMax M2’s flexible deployment options and robust performance ensure it remains relevant and capable of supporting increasingly complex workflows.

Frequently Asked Questions about MiniMax M2

What makes MiniMax M2 different from larger AI models?

MiniMax M2 is designed for efficiency, activating only 10 billion parameters out of 230 billion total. This streamlined setup offers faster inference speeds and lower costs, making it ideal for real-time agent workflows without sacrificing performance.

How does MiniMax M2 perform in coding and agentic tasks?

MiniMax M2 excels at programming, tool use, and complex reasoning tasks. Its performance on benchmarks like SWE-bench (~69.4) and BrowseComp (~44) shows it’s well-suited for multi-file coding, long-horizon planning, and orchestrating multi-step workflows efficiently.

Is MiniMax M2 suitable for deployment in different environments?

Yes, MiniMax M2 is open-source and supports local deployment via frameworks like vLLM and SGLang. Its flexible architecture makes it adaptable for various environments, from lightweight chatbots to industrial automation systems.

Why is MiniMax M2 considered cost-effective?

MiniMax M2 costs $0.30 per million input tokens and $1.20 per million output tokens, with throughput of about 100 tokens per second. Its efficient design reduces operational expenses while maintaining strong AI capabilities.

Can MiniMax M2 handle complex, multi-step tasks?

Absolutely! MiniMax M2 is built to manage long-horizon tasks like web browsing, code execution, and data retrieval, thanks to its agent system and ability to orchestrate multi-step tool calls seamlessly.

Sources: MiniMax, Hugging Face, Reddit, Artificial Analysis.