Google has just rolled out an upgraded preview of Gemini 2.5 Pro. This latest iteration builds on the foundation laid by previous versions, especially the I/O Edition released last month, which already showcased promising improvements in coding capabilities. The update isn’t just about incremental tweaks; it signals Google’s commitment to refining both performance and usability, making Gemini 2.5 Pro more versatile for diverse applications—ranging from complex coding tasks to reasoning-heavy benchmarks.

As Google emphasizes, this upgrade aims to bridge gaps noted in earlier releases by enhancing “style and structure,” allowing the model to generate responses that are not only accurate but also more creative and well-formatted.

Gemini 2.5 Pro upgrade: What’s new and why it matters

The upgrade’s timing is notable because it’s set for general availability within a few weeks, signaling a move toward broader adoption across enterprise and developer communities through platforms like Google AI Studio and Vertex AI. This rollout also coincides with a series of UI tweaks on the Gemini app for Android, including gestures like swipe left to activate Gemini Live—a feature designed to streamline user interaction and accessibility. These updates reflect Google’s holistic approach: improving backend AI models while simultaneously refining user interfaces to ensure smoother experiences.

Overview of the Gemini 2.5 Pro upgrade

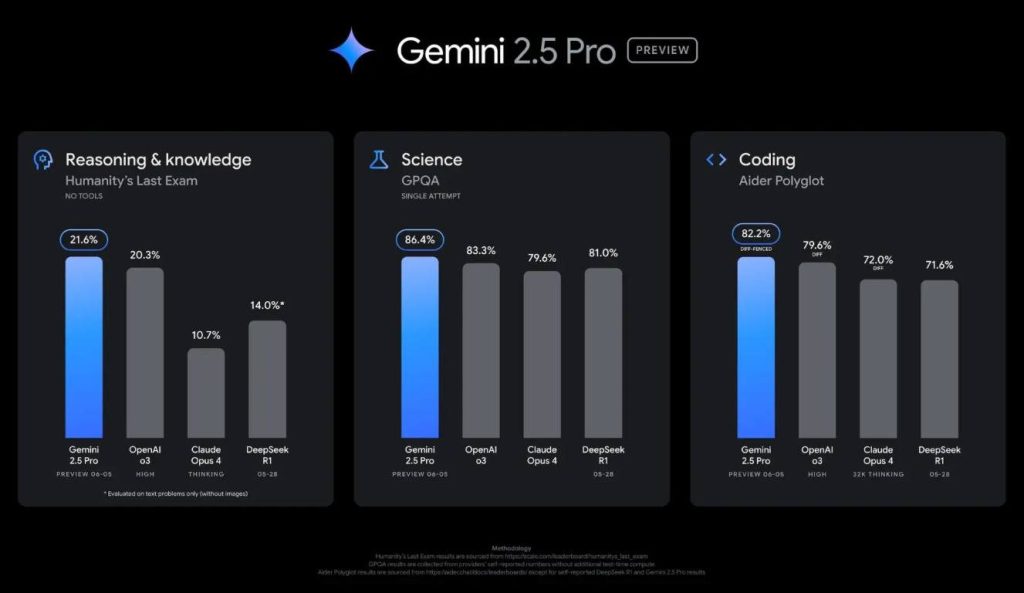

The core of the Gemini 2.5 Pro upgrade lies in its enhanced technical capabilities combined with refined response quality. Building on the previous version (05-06), Google’s latest preview introduces improvements aimed at tackling complex benchmarks such as GPQA and Humanity’s Last Exam (HLE), which test models’ skills in math, science, reasoning, and knowledge retention. Furthermore, this version leverages feedback from early users who observed performance declines outside specific domains—especially creative writing or structured responses—and addresses these issues directly.

This upgrade is part of Google’s broader strategy to make Gemini a competitive player against other advanced AI models like OpenAI’s GPT series or Anthropic’s Claude. The company continues emphasizing that the model now exhibits “top-tier performance” across key benchmarks while maintaining agility in multi-task scenarios such as coding or scientific problem-solving.

How the upgrade impacts AI performance and usability

One standout aspect of this Gemini 2.5 Pro upgrade is its improvement in Elo scores, a common way to rank AI models on leaderboards such as LMArena and WebDevArena. Google reports a 24-point Elo jump on LMArena, bringing Gemini’s score to 1470, and a 35-point rise on WebDevArena, where it now leads at 1443.

Beyond raw scores, these enhancements translate into tangible benefits for users: more accurate code generation, better understanding of nuanced questions, and responses that are clearer and more creatively structured. Developers utilizing APIs via Google’s platform now have finer control over latency and costs thanks to features like thinking budgets introduced with the update—making it easier to deploy high-performance models without overspending.
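As a rough illustration of what a thinking budget looks like from the developer side, the sketch below only builds a request body and makes no network call. The helper name `build_request` is hypothetical, and the field names (`generationConfig`, `thinkingConfig`, `thinkingBudget`) are an assumption about the REST request shape; check them, and the allowed budget range, against the current Gemini API documentation before relying on them.

```python
import json


def build_request(prompt: str, thinking_budget: int) -> dict:
    """Build a JSON body for a generateContent-style call.

    Field names are assumptions for illustration, not confirmed by the article.
    """
    if thinking_budget < 0:
        raise ValueError("thinking_budget must be non-negative")
    return {
        "contents": [{"parts": [{"text": prompt}]}],
        "generationConfig": {
            # Caps how many tokens the model may spend on internal reasoning.
            "thinkingConfig": {"thinkingBudget": thinking_budget},
        },
    }


body = build_request("Refactor this function for readability.", thinking_budget=1024)
print(json.dumps(body, indent=2))
```

A smaller budget would generally trade some reasoning depth for lower latency and cost, which is the kind of control the update is described as offering.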

The refinements aren’t purely technical; they also bolster usability by making interactions feel more natural. The model’s responses are now better formatted with improved style elements—an aspect highlighted by Google itself—which helps foster trust and ease of use when integrating into real-world workflows.

Performance boost: Elo score jumps by 24 points on LMArena

Understanding Elo scores in AI benchmarks

Elo scores originated from chess ratings but have since been adopted widely across competitive fields—including AI benchmarking—to objectively measure skill levels over time against various opponents or standards. In this context, a higher Elo indicates stronger performance on specific tasks or tests designed around problem-solving ability, language comprehension, logical reasoning, or domain-specific expertise.
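For readers who want the mechanics, here is a minimal sketch of the classic chess-style Elo model. The step size `k = 32` is an assumed, conventional value, and leaderboards may fit ratings with a different statistical procedure, so treat this as an illustration of the idea rather than any leaderboard’s actual method.

```python
def expected_score(rating_a: float, rating_b: float) -> float:
    """Expected score of A against B (1 = win, 0.5 = draw, 0 = loss)."""
    return 1.0 / (1.0 + 10 ** ((rating_b - rating_a) / 400.0))


def update_rating(rating_a: float, rating_b: float, score_a: float, k: float = 32.0) -> float:
    """Return A's new rating after one game; k is an assumed step size."""
    return rating_a + k * (score_a - expected_score(rating_a, rating_b))


print(expected_score(1500, 1500))      # 0.5
print(update_rating(1500, 1500, 1.0))  # 1516.0
```

Two equally rated players are each expected to score 0.5, so a win moves the winner up by half of `k`. Beating a much stronger opponent moves the rating more, and beating a much weaker one moves it less.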

For AI models like Gemini 2.5 Pro, these scores come from leaderboards such as LMArena, which ranks language models using crowd-sourced head-to-head comparisons of their responses, and WebDevArena, which focuses on web development tasks. An increase in Elo score therefore means that the model’s output was preferred more often, which can reflect better accuracy as well as better handling of tricky prompts or edge cases.

Details of the Elo score increase and what it signifies

With this new preview version rolling out ahead of full launch, Google reports a 24-point increase on LMArena, from approximately 1446 to about 1470, which is notable given how competitive these leaderboards tend to be. Similarly, Gemini leads WebDevArena at 1443 after a 35-point climb.
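To put the LMArena figures in perspective, the sketch below converts a rating gap into a head-to-head win probability. It assumes the classic Elo logistic formula on a 400-point scale; the leaderboard’s own scoring may differ, and the 1446 starting point is only approximate, so the result is a rough guide.

```python
def win_probability(rating_gap: float) -> float:
    """Chance that the higher-rated side is preferred, given its rating lead."""
    return 1.0 / (1.0 + 10 ** (-rating_gap / 400.0))


gap = 1470 - 1446  # new score minus the approximate previous score
p = win_probability(gap)
print(f"{gap}-point gap -> {p:.1%}")  # 24-point gap -> 53.4%
```

Under that assumption, the new preview would be preferred over its predecessor in a little more than 53% of direct comparisons: a real but modest edge.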

Such jumps imply that the upgraded model handles challenging questions better than before, whether complex scientific queries or nuanced language understanding, and suggest meaningful progress toward surpassing prior benchmarks for large language models (LLMs). It’s worth noting that these gains come after Google addressed earlier feedback that performance outside of coding had slipped relative to the March release (03-25).

Comparison with previous versions and competitors

Compared against older iterations such as Gemini’s initial release late last year, or competing systems like OpenAI’s GPT-4 and Anthropic’s Claude v3.x series, which often post similar leaderboard results, the recent upgrades place Gemini firmly among the top performers for multi-domain tasks.

The steady climb in Elo scores indicates both ongoing refinement on Google’s side and an increasingly capable model ready to move beyond experimental phases into real-world enterprise solutions. While exact comparisons can vary depending on test conditions (some models might excel at certain niche benchmarks, for instance), the consistent upward trend highlights how targeted upgrades can yield measurable gains across multiple evaluation metrics.

Dominating coding benchmarks like Aider Polyglot with Gemini 2.5 Pro

The significance of Aider Polyglot benchmark

Among the coding benchmarks in use today, such as HumanEval or BigCode, Aider Polyglot stands out because it assesses coding ability across several programming languages at once. For developers seeking versatile tools capable of handling Python alongside JavaScript, Java, C++, Rust and others, excelling here signals broad competence rather than niche specialization.

Google specifically notes that Gemini 2.5 Pro maintains leadership status “on difficult coding benchmarks like Aider Polyglot,” underscoring how well it manages multi-language code generation challenges in diverse contexts. This is especially relevant given industry demand for AI assistants that can switch between languages without sacrificing accuracy, a vital feature for integrated development environments (IDEs) or automated code review systems.
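To make the contrast between broad competence and niche specialization concrete, the sketch below aggregates pass/fail results per language and reports both the average and the weakest language. The records are made-up toy data, not real benchmark results, and the scoring scheme is an assumption for illustration rather than how Aider Polyglot itself is computed.

```python
from collections import defaultdict

# Toy, made-up records: (language, passed). Not real benchmark data.
results = [
    ("python", True), ("python", True), ("python", False),
    ("rust", True), ("rust", False),
    ("java", True), ("java", True),
]


def pass_rates(records):
    """Return the fraction of passed tasks for each language."""
    totals = defaultdict(int)
    passes = defaultdict(int)
    for lang, passed in records:
        totals[lang] += 1
        passes[lang] += int(passed)
    return {lang: passes[lang] / totals[lang] for lang in totals}


rates = pass_rates(results)
macro_avg = sum(rates.values()) / len(rates)  # each language weighted equally
weakest = min(rates, key=rates.get)           # language with the lowest pass rate

print(rates)
print(f"macro average: {macro_avg:.2f}, weakest language: {weakest}")
```

Weighting each language equally and looking at the weakest one helps keep a strong result in a single popular language from masking poor results elsewhere.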

How Gemini 2.5 Pro outperforms in multi-language coding tasks

The updated model demonstrates superior capacity not only through higher accuracy but also through better contextual understanding within multi-language projects, where it must parse different syntax rules correctly and generate syntactically valid code snippets quickly.

In practical terms:

- Produces cleaner code blocks aligned with best practices.

- Handles edge cases involving mixed-language files.

- Adapts quickly when prompted with varied programming paradigms.

Such advances mean developers can rely more heavily on automations powered by Gemini without fearing inconsistent outputs during critical stages like debugging or refactoring sessions.

Implications for developers and tech enthusiasts

For software engineers working on open-source projects or enterprise software, these improvements translate into increased efficiency: fewer manual corrections after generation mean faster workflows overall. Moreover, being able to confidently deploy an AI capable of multilingual code synthesis opens doors for cross-platform projects where native language support matters most, for example integrating Python-based machine learning modules with front-end JavaScript apps.

Tech enthusiasts benefit too: they get access to a cutting-edge tool that sets new standards not only in raw benchmark numbers but also in practical usability across diverse programming ecosystems.

Frequently asked questions on Gemini 2.5 Pro upgrade

What are the main improvements introduced in the Gemini 2.5 Pro upgrade?

The Gemini 2.5 Pro upgrade brings significant enhancements in performance, including a 24-point Elo score jump on LMArena, better coding capabilities like excelling in the Aider Polyglot benchmark, and improved response quality with more creative and well-structured outputs. It also addresses previous limitations outside specific domains such as creative writing and structured responses, making it more versatile for various tasks.

How does the Gemini 2.5 Pro upgrade impact AI benchmark scores?

The upgrade notably increases Elo scores: Google reports a rise of 24 points on LMArena and 35 points on WebDevArena, indicating stronger overall performance in language understanding, reasoning, and coding tasks. These score improvements reflect the model’s enhanced ability to handle complex questions and diverse benchmarks, positioning it among top performers in multi-domain evaluations.

When will the Gemini 2.5 Pro be generally available to users?

Google has announced that the Gemini 2.5 Pro upgrade will be generally available within a few weeks, with broader adoption expected through platforms like Google AI Studio and Vertex AI. The rollout aims to bring this advanced model to enterprises and developer communities worldwide.

What makes Gemini 2.5 Pro stand out compared to other AI models like GPT-4 or Claude v3.x?

The recent Gemini 2.5 Pro upgrade demonstrates competitive performance by leading in key benchmarks such as Elo scores on LMArena and WebDevArena, especially excelling in multi-language coding tasks like Aider Polyglot. Its continuous scoring improvements show its potential to rival or surpass other top-tier models across multiple domains.

How does the Gemini 2.5 Pro upgrade improve multi-language coding abilities?

The update enhances contextual understanding across different programming languages—producing cleaner code snippets, handling edge cases involving mixed languages, and adapting swiftly to varied paradigms—making it highly effective for cross-platform development environments where multilingual code generation is vital.