In recent months, Meta has pushed aggressively into superintelligence research. The company’s strategy appears to center on assembling a powerhouse team of AI experts, many of whom come from leading labs like OpenAI, Google DeepMind, and Microsoft. This effort signals a clear intent to develop cutting-edge AI capabilities that could rival or even surpass those of industry giants. By hiring high-profile talent and investing heavily in research, Meta aims to carve out a significant position within the rapidly evolving field of artificial general intelligence (AGI).

Meta’s Bold Push into Superintelligence

The strategic vision behind Meta superintelligence

Meta’s overarching goal with Meta superintelligence is to integrate highly capable AI models across its ecosystem, whether in social platforms, virtual and augmented reality devices such as Oculus headsets or future AR glasses, or enterprise solutions. At its core, this vision involves creating AI systems that are not only powerful but also adaptable across multiple modalities such as text, voice, and images.

According to insiders familiar with Meta’s plans, the company’s focus is on developing multimodal models that can reason and generate synthetic data with greater accuracy than current offerings. These models could change how users interact with technology: imagine voice-activated AR glasses that understand complex commands or generate contextual visuals on demand.

The strategic emphasis on Meta superintelligence aligns closely with Zuckerberg’s long-term ambition: turning Meta into a leader in immersive experiences powered by intelligent systems. It reflects a recognition that social media alone isn’t enough; instead, integrating AI-driven hardware and software will be key to dominating next-gen digital interactions.

How Meta aims to compete with AI giants

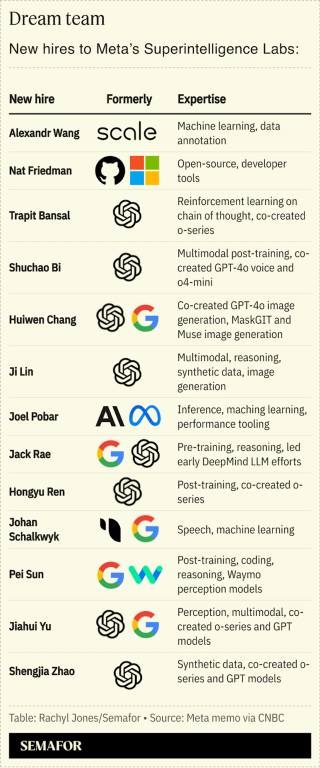

Competing against established titans like OpenAI and Google requires more than just hiring talent; it demands innovation at scale. With recent hires from OpenAI, Scale AI, Microsoft, and Google, Meta is positioning itself as an emerging force in superintelligence development.

OpenAI supplied the largest share of new recruits; many ex-OpenAI researchers bring expertise in large language models (LLMs), perception systems, and synthetic data creation. The hiring of Pei Sun, who developed perception models for Waymo, also indicates an interest in integrating advanced perception capabilities directly into hardware like AR glasses or VR headsets.

Moreover, Meta’s roughly $15 billion investment in Scale AI underscores its commitment to improving data labeling and model training efficiency. Alexandr Wang, Scale AI’s co-founder and former CEO, now serves as Chief AI Officer at Meta, overseeing this push alongside former GitHub CEO Nat Friedman.

In addition to talent acquisition, Meta is actively developing proprietary models aimed at multimodal reasoning, a step beyond traditional LLMs. These efforts suggest the company wants systems capable of understanding context across different modalities simultaneously rather than handling isolated tasks.

Furthermore, the company’s focus on integrating AI into hardware products indicates an ambition not just for software supremacy but also for shaping the future landscape of augmented reality devices powered by Meta superintelligence.

Key Hires from Leading AI Labs for Meta superintelligence

Profiles of ex-OpenAI experts joining Meta

One of the most talked-about hires is Pei Sun, whose background includes developing perception systems at Waymo, a clear signal that visual processing plays a crucial role in Meta’s plans. His expertise points toward building robust perception modules for AR devices, where understanding real-world environments accurately can redefine user experiences.

The ex-OpenAI staff brought in include researchers who have worked on foundational language models such as those behind GPT-4 and ChatGPT. Their skill sets enable Meta to accelerate development of the natural language understanding and reasoning capabilities essential for multimodal applications involving speech synthesis and comprehension.

These professionals often have experience working on safety protocols around AGI development as well as scaling large neural networks efficiently—a critical aspect given concerns about managing complex autonomous systems safely.

Talent from Scale, Microsoft, and Google shaping Meta’s future

From Scale AI comes Alexandr Wang, now serving as Chief AI Officer, whose arrival gives Meta access to the top-tier data labeling infrastructure needed to train massive models reliably. Wang’s expertise helps optimize the data pipelines essential for building scalable Meta superintelligence solutions.

Microsoft contributes talent experienced in deploying cloud-based machine learning services at scale, skills that are vital when training multimodal models requiring immense computational resources. Meanwhile, Google DeepMind alumni bring insights into reinforcement learning techniques that could enhance reasoning abilities within these new systems.

Together these hires form a diverse team blending language and reasoning modeling (from OpenAI veterans), perception engineering (from Pei Sun’s Waymo work), data infrastructure expertise (from Scale AI), cloud infrastructure expertise (from Microsoft), and advanced RL techniques (from DeepMind). This cross-pollination increases the likelihood of the breakthroughs needed for a truly versatile Meta superintelligence capable of reasoning seamlessly across different contexts.

What these hires mean for Meta superintelligence initiatives

The influx of top-tier talent signals that Meta is serious about closing gaps with competitors by leapfrogging existing limitations within its AI tech stack. Internal sources suggest that these hires serve both short-term product goals and a longer-term vision of multimodal understanding driven by sophisticated reasoning engines.

However, industry watchers warn that bringing together such high-powered individuals isn’t without risks; internal dynamics could challenge collaboration efforts. As Helen Toner pointed out during her interview with Bloomberg: “It’ll be hard.” Managing egos among such distinguished researchers will be a particular challenge if organizational politics hinder progress toward shared goals under Meta’s superintelligence initiatives.

Nonetheless, Zuckerberg’s leadership remains committed: “We’re building something transformative,” he reportedly said during recent internal meetings. If successful, these efforts could fundamentally change how humans interact with machines—not just through social media but via embedded intelligent interfaces everywhere.

| Key Hires | Background & Expertise | Role & Focus Area |
|---|---|---|
| Pei Sun | Perception modeling (Waymo) | Visual & sensor integration |
| Researchers from OpenAI | Language & reasoning models | Multimodal model development |
| Alexandr Wang | Data labeling & infrastructure | Scalable training pipelines |
| Former DeepMind engineers | Reinforcement learning techniques | Reasoning & autonomous decision-making |

This lineup highlights how diverse skill sets, from perception engineering to large-scale model training, are converging under Meta superintelligence, with the ultimate aim of creating adaptable AGI-like systems integrated into daily life.

Frequently asked questions on Meta superintelligence

What is Meta superintelligence and why is Meta investing heavily in it?

Meta superintelligence refers to the company’s efforts to develop highly advanced AI systems capable of reasoning, perception, and multimodal understanding that could rival or surpass those of current industry leaders. By investing heavily, including hiring top talent from OpenAI, Google, and Microsoft, Meta aims to position itself as a major player in artificial general intelligence (AGI). Its focus is on building adaptable, powerful models that can be integrated across social media platforms, hardware like AR glasses, and enterprise solutions. This move signals Meta’s ambition to set new standards for what social media companies can achieve with superintelligent systems.

Who are some of the key hires contributing to Meta superintelligence?

Meta has brought in several prominent experts from leading AI labs. Notable among them is Pei Sun, formerly at Waymo, who specializes in perception models crucial for AR devices. Researchers from OpenAI with experience in language models like GPT-4 have also joined, helping accelerate natural language understanding and reasoning capabilities. Additionally, Alexandr Wang of Scale AI brings expertise in the data labeling infrastructure essential for training large-scale models. Former DeepMind engineers contribute reinforcement learning techniques aimed at improving autonomous decision-making within Meta superintelligence projects.