The DeepSeek R1 update marks a significant step forward in reasoning and logic capabilities. This latest iteration, known as DeepSeek-R1-0528, shows clear improvements over its predecessor. With these enhancements, DeepSeek positions itself as a serious contender alongside top-tier models from giants like OpenAI and Google. Its ability to perform complex reasoning tasks with higher accuracy and fewer hallucinations has drawn attention across industry and academia, making it an important development in the AI landscape.

DeepSeek R1 Update: A New Benchmark in AI Reasoning

What Makes the DeepSeek R1 Update Stand Out

The core strength of the DeepSeek R1 update lies in its strategic focus on reasoning depth. Rather than relying on more data or a larger architecture alone, this update combines increased computational resources with algorithmic optimization during post-training. The result is a model that reasons more deeply, spending more tokens per query to work through nuanced problems.

One key aspect is that this update improves performance without drastically increasing training costs, a point DeepSeek often emphasizes. The company has enhanced reasoning while maintaining efficiency, making the model practical for many applications, including enterprise solutions and custom deployments through cloud providers such as Amazon Web Services (AWS) and Microsoft Azure. This flexibility appeals to companies seeking more control over their data, which is highly valued in today's privacy-conscious environment.

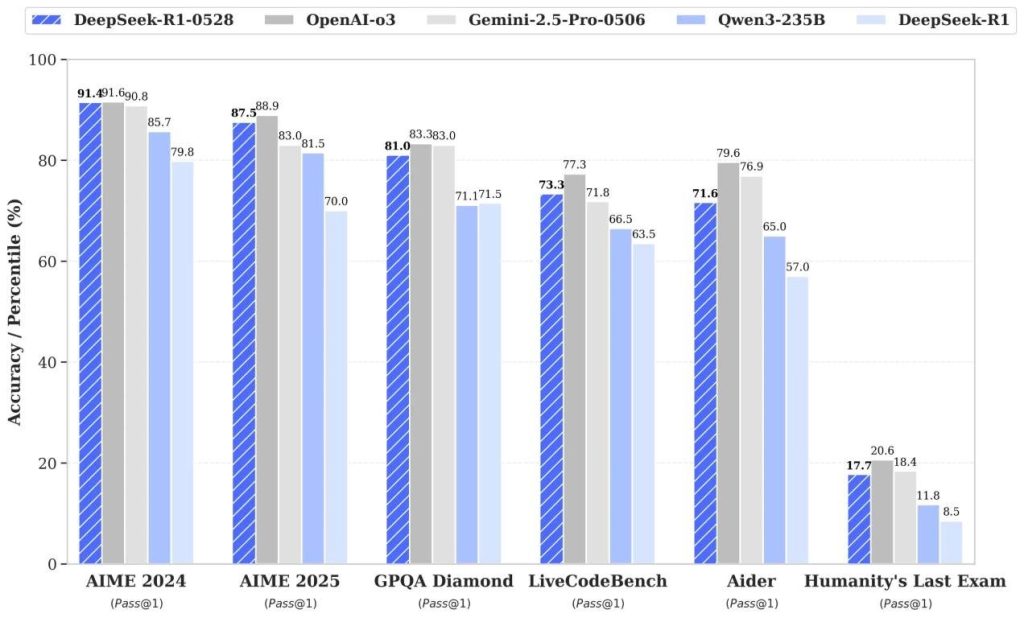

Comparisons with other models underscore its improved reasoning. According to industry analyst reports, "DeepSeek's upgraded model now rivals top models such as Gemini 2.5 Pro from Google or OpenAI's o3." The update also reduces hallucination rates (a common problem in which AI generates plausible but false information), boosting trustworthiness in applications that require high factual accuracy.

Key Improvements Over Previous Versions

The updates introduced in DeepSeek-R1-0528 reflect a thoughtful refinement rather than a complete overhaul. Here are some critical areas where improvements stand out:

| Aspect | Previous Version | Latest (R1-0528) | Impact |
|---|---|---|---|
| Reasoning Depth | Moderate | Significantly enhanced | Better handling of multi-step logic problems |
| Avg. Tokens per Question (AIME) | ~12K | ~23K | Longer, more thorough reasoning on complex tasks |
| Math & Logic Accuracy (AIME 2025) | 70% | 87.5% | Higher success rate on benchmark tests |
| Hallucination Rate | Higher | Significantly reduced | More reliable factual responses |
| Function Calling Support | Basic support | Enhanced support | Better automation and task execution |
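The headline numbers in the table are easier to compare in relative terms. The short sketch below uses only the figures quoted in the table (AIME 2025 accuracy of 70% and 87.5%, roughly 12K and 23K tokens per question); the helper names are illustrative, not from any DeepSeek tool.

```python
def point_change(old: float, new: float) -> float:
    """Absolute change (percentage points when the inputs are percentages)."""
    return new - old


def relative_change(old: float, new: float) -> float:
    """Change relative to the old value, as a fraction (0.25 means +25%)."""
    return (new - old) / old


# Figures quoted in the comparison table.
aime_old, aime_new = 70.0, 87.5          # AIME 2025 accuracy, percent
tokens_old, tokens_new = 12_000, 23_000  # approximate tokens per question

print(f"AIME 2025: +{point_change(aime_old, aime_new):.1f} points "
      f"({relative_change(aime_old, aime_new):.0%} relative)")
# The error rate falls from 30% to 12.5%, i.e. to well under half.
print(f"Error rate: {100 - aime_old:.1f}% -> {100 - aime_new:.1f}%")
print(f"Tokens per question: {tokens_new / tokens_old:.2f}x")
```

Looking at the error rate as well as the accuracy shows that the jump removes more than half of the previous model's mistakes on this benchmark, at the cost of roughly twice as many tokens per question.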

These advancements are driven by increased computational resources dedicated to post-training optimization, an approach that balances performance gains with manageable costs. The training also reinforces chain-of-thought reasoning, which helps the model work through complex problems step by step instead of jumping to conclusions prematurely.
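That step-by-step reasoning is visible in the model's raw output. As we understand it, when the open weights are run locally, R1-style models write their chain of thought between `<think>` and `</think>` tags before the final answer (DeepSeek's hosted API returns it in a separate field instead). A minimal sketch, assuming that tag convention, for separating the two:

```python
from typing import NamedTuple


class ParsedOutput(NamedTuple):
    reasoning: str  # the chain of thought, empty if none was found
    answer: str     # the final answer shown to the user


def split_reasoning(raw: str) -> ParsedOutput:
    """Separate an R1-style completion into reasoning and final answer.

    Assumes the reasoning ends with a "</think>" tag. The opening "<think>"
    may be missing when a chat template pre-fills it in the prompt.
    """
    head, sep, tail = raw.partition("</think>")
    if not sep:
        # No closing tag: treat the whole completion as the answer.
        return ParsedOutput(reasoning="", answer=raw.strip())
    reasoning = head.split("<think>", 1)[-1]  # drop the opening tag if present
    return ParsedOutput(reasoning=reasoning.strip(), answer=tail.strip())


raw = "<think>\nCheck divisors up to sqrt(17): 2 and 3 do not divide 17.\n</think>\n\n17 is prime."
parsed = split_reasoning(raw)
print(parsed.answer)
```

Using `str.partition` on the closing tag keeps the parser tolerant of templates that omit the opening tag, which a strict regex for the full `<think>...</think>` pair would miss.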

Moreover, the model’s capacity to process longer input sequences allows it to maintain context over extended conversations or lengthy logical chains—a feature that profoundly impacts real-world applications such as coding assistance or scientific research.

Real-World Applications and Impact

The improvements in the DeepSeek R1 update aren't just academic; they have tangible implications across various sectors. In software development environments where natural language prompts are used for coding ("vibe coding"), these enhancements translate into more accurate code generation and debugging assistance, capabilities highlighted by PYMNTS.

In educational contexts like mathematics competitions (e.g., AIME), the leap from 70% to 87.5% accuracy means fewer errors when solving complex problems automatically—potentially reducing human effort and accelerating learning processes. Similarly, industries relying on logical inference such as finance analytics or legal document analysis benefit from deeper understanding provided by this updated model.
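Benchmark accuracies like these are usually averages over several sampled answers per problem. DeepSeek's model card for R1-0528 describes, as we read it, generating 16 responses per query to estimate pass@1. The sketch below shows that averaging; the grading data is a hypothetical toy example, not real AIME output.

```python
from statistics import mean


def pass_at_1(results: list[list[bool]]) -> float:
    """Estimate pass@1 from several graded samples per problem.

    results[i][j] is True if sample j for problem i was judged correct.
    Each problem's score is its fraction of correct samples; pass@1 is the
    mean of those per-problem scores.
    """
    if not results or any(len(samples) == 0 for samples in results):
        raise ValueError("need at least one sample for every problem")
    return mean(sum(samples) / len(samples) for samples in results)


# Hypothetical toy data: 3 problems, 4 samples each (not real AIME results).
toy = [
    [True, True, True, True],     # always solved -> 1.0
    [True, False, True, False],   # solved half   -> 0.5
    [False, False, False, True],  # rarely solved -> 0.25
]
print(f"pass@1 = {pass_at_1(toy):.4f}")  # (1.0 + 0.5 + 0.25) / 3
```

This averaging is also why a score such as 87.5% need not correspond to a whole number of AIME's 30 problems (87.5% of 30 is 26.25).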

Beyond individual use cases, its open-source release under the MIT License makes DeepSeek-R1 accessible for customization and integration into proprietary systems without licensing fees, empowering organizations worldwide to deploy high-quality reasoning AI while retaining full control over their data security.

This move toward more accurate reasoning models also shapes future research. Researchers studying how these methods can be adapted beyond massive architectures suggest that "The chain-of-thought techniques distilled from DeepSeek-R1 could catalyze breakthroughs in small-scale models."
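Distillation here means training a smaller "student" model on reasoning traces produced by the larger "teacher". A minimal sketch of the data-preparation step, assuming a hypothetical record layout (prompt, teacher reasoning, teacher answer, a correctness flag) and reusing the `<think>` tag convention for the target text; real pipelines differ in templates, filtering, and scale.

```python
import json
from dataclasses import dataclass


@dataclass
class TeacherSample:
    """One teacher generation; this record layout is hypothetical."""
    prompt: str
    reasoning: str
    answer: str
    correct: bool  # e.g. verified against a reference answer


def to_sft_record(sample: TeacherSample) -> dict:
    """Format a sample as a prompt/completion pair for supervised fine-tuning.

    The completion keeps the full chain of thought so the student learns to
    reason step by step, not just to emit final answers.
    """
    completion = f"<think>\n{sample.reasoning}\n</think>\n\n{sample.answer}"
    return {"prompt": sample.prompt, "completion": completion}


def build_dataset(samples: list[TeacherSample]) -> str:
    """Keep only verified-correct traces and serialize them as JSON Lines."""
    return "\n".join(
        json.dumps(to_sft_record(s)) for s in samples if s.correct
    )


samples = [
    TeacherSample("What is 3 * 4?", "3 * 4 = 12.", "12", correct=True),
    TeacherSample("What is 5 - 7?", "5 - 7 = 2.", "2", correct=False),  # filtered out
]
print(build_dataset(samples))
```

Filtering on correctness before training is one common way to keep flawed teacher reasoning out of the student's data.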

Enhanced Reasoning and Logic Capabilities in DeepSeek R1 Update

The DeepSeek R1 update isn’t just about raw power; it fundamentally enhances how models think through problems—mimicking human-like reasoning patterns with precision and depth.

Advanced Problem-Solving Skills

One standout feature is how well DeepSeek-R1 now tackles multi-faceted tasks requiring layered logic steps. For instance, when faced with intricate mathematical puzzles or programming challenges, it not only produces correct answers more frequently but does so by following multi-stage inference processes akin to human problem-solving strategies.

This is partly due to the longer reasoning it performs per question (an average of about 23K tokens on AIME, up from about 12K), which gives it room for the extended intermediate work that complex deductions require, a factor highlighted in evaluations on benchmark sets like AIME 2024/2025 [source].

Improved Contextual Understanding

Another core upgrade involves contextual comprehension: the model's ability to retain pertinent details throughout long passages of text or extended dialogues. This results in less context loss and fewer misunderstandings when interpreting ambiguous queries.

For example, in open-ended conversations about technical topics or nuanced scenarios, DeepSeek-R1 exhibits an improved grasp that feels more intuitive than previous versions. Its ability to integrate background information seamlessly leads to responses that are coherent and aligned with user expectations—even over prolonged interactions.
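Over the hosted API, a prolonged interaction is just a growing list of messages. DeepSeek's API documentation states, as we understand it, that for the `deepseek-reasoner` model the chain of thought (`reasoning_content`) from earlier turns should not be sent back, and that including it in input messages causes an error. A minimal stdlib sketch, assuming the endpoint and model name documented at the time of writing; the helper names are hypothetical, and the request is built but not sent.

```python
import json
import urllib.request

# Endpoint and model name as given in DeepSeek's API docs at the time of
# writing; verify against the current documentation before use.
API_URL = "https://api.deepseek.com/chat/completions"
MODEL = "deepseek-reasoner"


def next_history(history: list[dict], assistant_reply: dict, user_text: str) -> list[dict]:
    """Extend the conversation for the next request.

    Only the assistant's final `content` is carried forward; its
    `reasoning_content` (the chain of thought) is deliberately dropped.
    """
    return history + [
        {"role": "assistant", "content": assistant_reply["content"]},
        {"role": "user", "content": user_text},
    ]


def build_request(messages: list[dict], api_key: str) -> urllib.request.Request:
    """Build (but do not send) a chat-completions POST request."""
    body = json.dumps({"model": MODEL, "messages": messages}).encode("utf-8")
    return urllib.request.Request(
        API_URL,
        data=body,
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


history = [{"role": "user", "content": "Is 221 prime?"}]
reply = {
    "role": "assistant",
    "reasoning_content": "221 = 13 * 17, so it has divisors other than 1 and itself.",
    "content": "No, 221 = 13 x 17.",
}
history = next_history(history, reply, "What about 223?")
req = build_request(history, api_key="YOUR_API_KEY")
# urllib.request.urlopen(req) would send it and return the JSON response.
```

Dropping the reasoning also keeps long conversations compact, since each turn's chain of thought can be far longer than its final answer.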

Handling Complex Logical Tasks with Ease

Handling complex logical tasks requires not just understanding but also executing steps correctly under multiple constraints, a demanding challenge even for advanced LLMs [see https://huggingface.co/deepseek-ai/DeepSeek-R1-0528]. The upgraded version excels here because its training encourages chain-of-thought, stepwise reasoning rather than single-shot answers.

This capability was demonstrated on benchmarks such as HMMT 2025 and CNMO 2024, where accuracy rose substantially over the prior version, highlighting its deductive skills even when juggling multiple variables simultaneously.

In conclusion, and echoing insights shared at recent conferences, the DeepSeek R1 update represents a meaningful leap toward AI systems capable of genuine reasoning rather than surface-level pattern matching alone [source]. It paves the way for smarter solutions in industries where high-stakes decisions depend on logical inference.

Frequently asked questions on the DeepSeek R1 update

What are the main improvements introduced in the DeepSeek R1 update?

The DeepSeek R1 update brings several notable enhancements, especially in reasoning depth and context handling. It significantly improves multi-step logic processing, roughly doubles the reasoning effort per question (from an average of around 12K to 23K tokens on AIME), and raises accuracy on AIME 2025 from 70% to 87.5%. Hallucination rates are also reduced, making responses more reliable. These upgrades enable the model to perform complex reasoning tasks more effectively while maintaining efficiency and cost-effectiveness.

Why is the reasoning improvement in DeepSeek R1 significant for real-world applications?

Enhanced reasoning means AI can handle more complex problems with greater accuracy—crucial for fields like coding assistance, scientific research, finance analytics, or legal analysis. For example, its ability to process longer inputs without losing context makes it ideal for extended conversations or detailed document analysis. The reduction in hallucinations also boosts trustworthiness across applications that demand high factual precision.

Will the DeepSeek R1 update impact future AI research or development?

Absolutely! The advancements seen with the DeepSeek R1 update—particularly its chain-of-thought prompting techniques and improved logical reasoning—are likely to influence future AI models. Researchers believe these methods can be scaled down for smaller models or integrated into various industries to push forward smarter decision-making tools. As one expert noted, “The chain-of-thought techniques distilled from DeepSeek-R1 could catalyze breakthroughs in small-scale models.”

What makes the DeepSeek R1 update stand out compared to other open-source models?

The DeepSeek R1 update stands out because it combines algorithmic improvements with efficient use of computational resources to enhance reasoning without drastically increasing costs. Its ability to perform multi-step logic tasks accurately while reducing hallucinations sets it apart from other open-source options. Plus, its release under the MIT License makes customization accessible for organizations seeking high-quality reasoning AI solutions.

Can I use the DeepSeek R1 update for enterprise-level projects?

Yes! The flexibility of the DeepSeek R1 update allows integration into cloud platforms like AWS or Azure, making it suitable for enterprise deployments. Its improved performance on complex reasoning tasks supports better automation and decision-making across various sectors, from finance to education, while letting organizations maintain control over data privacy and security.
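For automation, the function calling support mentioned in the comparison table means the model returns a structured request to call one of your functions, and your own code executes it. A minimal local sketch, assuming the OpenAI-compatible tool-call shape (a `function` object with a `name` and JSON-encoded `arguments`) that DeepSeek's API follows; the `compound_interest` tool, the registry, and the call values are hypothetical.

```python
import json
from typing import Any, Callable


def compound_interest(principal: float, rate: float, years: int) -> float:
    """Hypothetical example tool: balance after annual compounding."""
    return round(principal * (1 + rate) ** years, 2)


# Tool schema in the OpenAI-compatible format, sent along with the chat request.
TOOLS = [
    {
        "type": "function",
        "function": {
            "name": "compound_interest",
            "description": "Balance after compounding interest annually.",
            "parameters": {
                "type": "object",
                "properties": {
                    "principal": {"type": "number"},
                    "rate": {"type": "number", "description": "e.g. 0.05 for 5%"},
                    "years": {"type": "integer"},
                },
                "required": ["principal", "rate", "years"],
            },
        },
    }
]

# Map tool names the model may request to local Python functions.
REGISTRY: dict[str, Callable[..., Any]] = {"compound_interest": compound_interest}


def dispatch(tool_call: dict) -> dict:
    """Run one tool call from the model and wrap the result as a tool message."""
    fn = tool_call["function"]
    if fn["name"] not in REGISTRY:
        raise KeyError(f"unknown tool: {fn['name']}")
    args = json.loads(fn["arguments"])  # arguments arrive as a JSON string
    result = REGISTRY[fn["name"]](**args)
    return {"role": "tool", "tool_call_id": tool_call["id"], "content": json.dumps(result)}


# A tool call shaped like one the model might return (hypothetical values).
call = {
    "id": "call_0",
    "type": "function",
    "function": {
        "name": "compound_interest",
        "arguments": '{"principal": 1000, "rate": 0.05, "years": 2}',
    },
}
print(dispatch(call)["content"])
```

Keeping an explicit registry means the model can only trigger functions you have deliberately exposed, which matters when its output drives real actions.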