The debut of the Llama API marks an important step for Meta in the competitive AI landscape. The API gives developers direct access to Meta's Llama models, along with tools for building, fine-tuning, and evaluating applications. It arrives at a significant moment for Meta, which is trying to solidify its position in the rapidly evolving AI space while competing with OpenAI and Google.

What is the Llama API?

Overview of Meta’s AI Models

Meta’s Llama series has already made waves in the AI community, amassing over a billion downloads since its release. These models are valued for their versatility and strong performance across many tasks. The Llama API lets developers use them as a hosted service, so they can build custom applications on Llama without running the models themselves. The initiative signals a shift for Meta from being only an open-source model provider to becoming a service-oriented company in the AI sector.

The announcement came during Meta’s inaugural LlamaCon, where the company showcased its latest offerings and its commitment to an ecosystem that supports developer innovation. By building fine-tuning tools and performance evaluation suites into the API, Meta encourages experimentation and adaptation within its developer community.

Key Features of the Llama API

So, what makes the Llama API stand out? Here are some key features:

High-Speed Inference: Through partnerships with Cerebras and Groq, developers can get inference speeds up to 18 times faster than traditional GPU-based solutions. This matters for real-time applications, where latency directly affects user experience.

Custom Model Support: Developers get tools to generate training data, fine-tune models for specific needs, and evaluate their performance.

Data Privacy Assurance: Meta has committed not to use customer data from the Llama API to train its own models. Custom models built on the platform can also be transferred elsewhere, so developers are not locked in to Meta's service.

Accessibility Options: Developers can choose Cerebras- or Groq-powered model options directly within the API setup, which keeps the development workflow in one place regardless of the hardware underneath.

Together, these features show Meta competing on usability while making explicit commitments about how customer data is handled.
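A little arithmetic makes the speed claim easier to weigh. The sketch below converts a throughput speedup into the time needed to stream one response. The baseline throughput, response length, and the `generation_time_s` helper are hypothetical illustrations, not measured Llama API figures. The calculation also ignores network latency and time to first token.

```python
def generation_time_s(output_tokens: int, tokens_per_second: float) -> float:
    """Seconds needed to stream output_tokens at a given decode throughput."""
    if tokens_per_second <= 0:
        raise ValueError("throughput must be positive")
    return output_tokens / tokens_per_second


# HYPOTHETICAL numbers for illustration only; not measured Llama API figures.
BASELINE_TPS = 100.0   # assumed GPU decode throughput, tokens per second
SPEEDUP = 18.0         # the "up to 18x" upper bound quoted in the feature list
RESPONSE_TOKENS = 500  # assumed length of one chatbot reply

baseline = generation_time_s(RESPONSE_TOKENS, BASELINE_TPS)
accelerated = generation_time_s(RESPONSE_TOKENS, BASELINE_TPS * SPEEDUP)
print(f"baseline: {baseline:.2f} s, accelerated: {accelerated:.2f} s")
# prints "baseline: 5.00 s, accelerated: 0.28 s"
```

With these assumed numbers, a 500-token reply drops from about 5 seconds to under a third of a second. For a user, that is the difference between a noticeable wait and a near-instant answer.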

How to Access the Llama API

Limited Preview Details

The Llama API is currently in a limited preview, so interested developers need to register for early access through Meta’s designated channels. This early phase is meant to gather feedback and refine functionality before broader availability later this year.

The preview appears aimed mainly at developers who want to prototype quickly using partner technology such as that from Cerebras Systems. Discussions around the launch suggest that Meta expects to widen access soon after adjusting the service based on developer feedback.

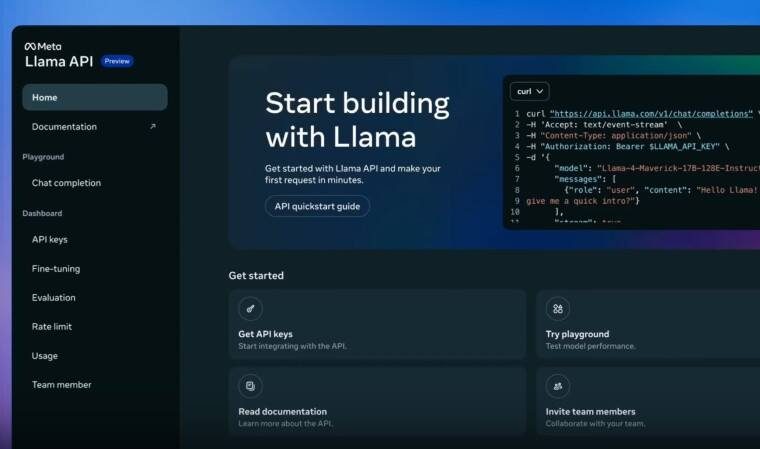

Getting Started with the API

Getting started with the Llama API involves several straightforward steps:

- Sign Up: Interested developers need to sign up via Meta’s developer portal.

- API Key Generation: After registration, users can generate an API key that enables interaction with different model options, including those powered by Cerebras.

- Documentation Review: Reading Meta’s documentation before building helps developers use the API’s features effectively.

- Experimentation Phase: Build test applications with different Llama models suited to specific goals, such as creative writing aids or automated customer support bots.

After these steps, developers can start building state-of-the-art AI capabilities into their own projects.
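Once an API key exists, a first request usually looks like a standard authenticated HTTPS call. The sketch below builds an OpenAI-style chat completion request with Python's standard library but does not send it. Several details are placeholders, not Meta's published API:

- the endpoint URL and model name;
- the request body shape;
- the `LLAMA_API_KEY` environment variable;
- the `build_chat_request` helper.

Check Meta's documentation for the real values.

```python
import json
import os
import urllib.request

# PLACEHOLDERS: substitute the endpoint and model name from Meta's documentation.
BASE_URL = "https://api.example.com/v1/chat/completions"
MODEL = "example-llama-model"


def build_chat_request(prompt: str, api_key: str,
                       model: str = MODEL,
                       url: str = BASE_URL) -> urllib.request.Request:
    """Build (but do not send) a chat completion request.

    ASSUMPTION: the service accepts an OpenAI-style JSON body with
    "model" and "messages" fields and a Bearer token header.
    """
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",  # key generated in the developer portal
            "Content-Type": "application/json",
        },
        method="POST",
    )


if __name__ == "__main__":
    # ASSUMPTION: the key is stored in a hypothetical LLAMA_API_KEY variable.
    key = os.environ.get("LLAMA_API_KEY", "test-key")
    req = build_chat_request("Draft a friendly greeting for a support bot.", key)
    print(req.get_method(), req.full_url)
    # Sending it would be urllib.request.urlopen(req), which needs preview access.
```

Keeping request construction separate from sending makes it easy to inspect or unit-test the payload before any preview credentials are involved.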

Potential Use Cases for the Llama API

Applications in Business and Tech

The implications of integrating the Llama API into everyday business operations could be profound:

Enhanced Customer Support Solutions: Companies could build chatbots that keep track of conversation context and respond quickly thanks to speed improvements over traditional systems, which could improve customer satisfaction metrics.

Real-Time Analytics Tools: High-speed inference lets organizations not only analyze data but also react as situations evolve. This is especially relevant in financial trading and crisis management.

Streamlined Content Creation Processes: Marketing teams could use these capabilities to generate targeted content quickly. Automating repetitive writing tasks frees professionals to spend more time on strategy.

In short, businesses may be able to pursue opportunities that the technical constraints of earlier frameworks previously put out of reach.
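For a support chatbot to keep track of context, it typically resends earlier turns with each request. The sketch below keeps a rolling history in the role/content message format that is common to chat-completion APIs. Whether the Llama API accepts messages in exactly this shape is an assumption the source does not confirm. The `Conversation` class and its `max_turns` cap are also hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Conversation:
    """Rolling chat history so each request carries recent context."""
    system_prompt: str
    max_turns: int = 10  # hypothetical cap on user/assistant pairs kept
    history: list[dict[str, str]] = field(default_factory=list)

    def add(self, role: str, content: str) -> None:
        """Append a message, then drop the oldest ones beyond the cap."""
        self.history.append({"role": role, "content": content})
        # Keep at most 2 * max_turns messages (max_turns user/assistant pairs).
        excess = len(self.history) - 2 * self.max_turns
        if excess > 0:
            del self.history[:excess]

    def messages(self) -> list[dict[str, str]]:
        """Messages to send with the next request, system prompt first."""
        return [{"role": "system", "content": self.system_prompt}] + self.history


chat = Conversation("You are a helpful support agent.", max_turns=2)
chat.add("user", "My order hasn't arrived.")
chat.add("assistant", "Sorry to hear that. What is your order number?")
chat.add("user", "It's 12345.")
print(len(chat.messages()))  # 4
```

Capping the history bounds request size, and so latency and cost. The trade-off is that the bot forgets the oldest turns of a long conversation.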

Creative Uses for Developers

Beyond conventional business settings, the API also opens up creative projects built on Meta’s models:

Interactive Storytelling Experiences: Developers could craft immersive narratives in which user choices shape the plot, keeping audiences engaged in ways static formats cannot.

Game Development Enhancements: With faster inference, game mechanics that generate dynamic interactions in real time, rather than relying only on scripted sequences, become more practical.

Generative Art Projects: Artists experimenting with machine learning could find new avenues. Producing algorithmically derived visuals becomes less daunting when optimized infrastructure reduces computational overhead.

As developers explore what these tools make possible, each upcoming release should bring new opportunities. Collaboration, after all, breeds innovation.

Frequently Asked Questions About the Llama API

What is the Llama API from Meta?

The Llama API is a new interface launched by Meta that provides developers with access to advanced AI models, allowing them to create custom applications leveraging the unique capabilities of the Llama series. This API aims to enhance creativity and efficiency in various applications while positioning Meta as a service-oriented player in the AI sector.

How can developers access the Llama API?

The Llama API is currently available through a limited preview. Developers interested in using it need to register for early access via Meta’s developer portal. This initial phase focuses on gathering feedback and refining functionalities before broader availability later this year.

What are some key features of the Llama API?

The Llama API offers several standout features:

- high-speed inference, up to 18 times faster than traditional GPU-based solutions;
- custom model support for tailored applications;
- a data privacy commitment that customer data isn’t used to train Meta’s models;
- the option to choose Cerebras- or Groq-powered models within the API setup.

What potential use cases exist for the Llama API?

The Llama API has numerous potential applications across business and tech sectors. It can enhance customer support through better chatbots, enable real-time analytics tools for instant data reaction, and streamline content creation processes. Additionally, it opens doors for creative uses like interactive storytelling and game development enhancements.

How does the Llama API compare to other AI APIs?

Meta is positioning the Llama API against competitors like OpenAI and Google on two fronts: high-speed inference and an explicit commitment to data privacy. Its focus on customization also gives developers more flexibility to build applications tailored to their needs.

Is there any cost associated with using the Llama API?

Pricing for the Llama API has not been publicly announced, as the API is still in a limited preview. More information about costs will likely be released once broader access becomes available.

Can I use my existing machine learning models with the Llama API?

The Llama API supports custom models built on Llama. Developers can fine-tune models on the platform and then transfer the resulting models elsewhere, so they do not face vendor lock-in.

When will wider access to the Llama API be available?

A specific date hasn’t been announced for broader availability of the Llama API. However, wider access is expected later this year, once feedback from early users has been gathered and adjustments have been made.